This blog post covers the following AWS services: High Availability (HA), Elastic Beanstalk, applications (SQS, SNS, API Gateway, Kinesis, Amazon MQ), Lambda, Lambda@Edge, AWS SAM (Serverless Application Model), advanced IAM (Identity and Access Management), AWS Cognito, AWS Directory Service, AWS SSO, Disaster Recovery & Migration services, CI/CD (Continuous Integration / Continuous Delivery), and AWS ECS.

1. High Availability:

Types of Load balancers:

- Application LB: load balances HTTP and HTTPS traffic.

- Network LB: load balances TCP or UDP traffic; use it for extreme performance.

- Classic LB: the legacy Elastic Load Balancer; load balances HTTP/HTTPS applications but is not as intelligent as the Application LB.

- If you need the IPv4 address of your end user, look at the X-Forwarded-For header.

- LBs can scale, but not instantaneously; contact AWS for a “warm up”.

- Troubleshooting:

- 4xx errors: client-induced errors

- 5xx errors: application-induced errors

- 503 errors: the LB is at capacity or has no registered targets

- 504 errors (gateway timeout): check the application or database server

- If the LB can’t connect to your app, check your security groups.

- Monitoring:

- ELB access logs record every request (so you can debug individual requests)

- CloudWatch metrics give you aggregate statistics (e.g., connection count)

- To log traffic, create a VPC flow log for each network interface associated with the ELB
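The X-Forwarded-For tip can be made concrete with a small helper. This is a minimal sketch that assumes the load balancer is the only proxy in front of your app: the LB appends the address it saw to the end of any X-Forwarded-For value the client already sent, so the rightmost entry is the one you can trust. The function name `client_ip_from_xff` is hypothetical.

```python
from typing import Optional


def client_ip_from_xff(header_value: Optional[str]) -> Optional[str]:
    """Return the client IP that the load balancer observed.

    Assumption: the LB is the only proxy. It appends the connecting
    client's address to the end of the header, so the rightmost entry is
    the one it saw; entries to the left were sent by the client and may be
    spoofed.
    """
    if not header_value:
        return None
    # "a, b, c" -> ["a", "b", "c"], ignoring empty entries.
    entries = [part.strip() for part in header_value.split(",") if part.strip()]
    return entries[-1] if entries else None
```

If another proxy (for example a CDN) sits in front of the LB, you would skip that many trusted entries from the right instead of taking the last one.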

Application Load Balancers:

- Routing to different target groups

- Routing based on the path in the URL (dom.com/users, dom.com/posts)

- Routing based on the hostname in the URL (one.dom.com, other.dom.com)

- Routing based on query strings and headers (dom.com/users?id=123&order=false)

- Application LBs are a great fit for microservices and container-based apps (Docker & Amazon ECS)

- Has a port mapping feature to redirect to a dynamic port in ECS

- In comparison, with Classic LBs we’d need multiple load balancers (one per application).
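To make host- and path-based routing concrete, here is a toy model of how listener rules are evaluated: rules are checked in priority order (lowest number first), the first rule whose conditions all match wins, and otherwise the default action applies. It is an illustration only; the `Rule` class and target group names are hypothetical, and `fnmatchcase` only approximates ALB wildcards (`*` and `?`; it also treats `[...]` as a character class, which ALB does not).

```python
from dataclasses import dataclass
from fnmatch import fnmatchcase
from typing import List, Optional


@dataclass
class Rule:
    priority: int                        # lower number = evaluated first
    target_group: str
    host_pattern: Optional[str] = None   # e.g. "one.dom.com"; None = any host
    path_pattern: Optional[str] = None   # e.g. "/users*"; None = any path

    def matches(self, host: str, path: str) -> bool:
        # Host names compare case-insensitively; paths are case-sensitive.
        host_ok = self.host_pattern is None or fnmatchcase(host.lower(), self.host_pattern.lower())
        path_ok = self.path_pattern is None or fnmatchcase(path, self.path_pattern)
        return host_ok and path_ok


def route(rules: List[Rule], default_target: str, host: str, path: str) -> str:
    """Return the target group of the first matching rule by priority, else the default."""
    for rule in sorted(rules, key=lambda r: r.priority):
        if rule.matches(host, path):
            return rule.target_group
    return default_target
```

With rules for `/users*`, `/posts*` and the host `other.dom.com`, one ALB serves all three back ends, which is the case where you would otherwise need several Classic LBs.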

Read the ELB FAQ for the Classic LB: https://aws.amazon.com/fr/elasticloadbalancing/faqs/

Advanced Load Balancer Theory:

- Sticky sessions:

- Binds a user’s session to a particular EC2 instance so the user does not lose their session data.

- Useful if you store session information locally on that instance

- You can enable/disable it in Classic LB or Application LB.

- The cookie used for stickiness has an expiration date you control.

- Cross-zone load balancing lets each load balancer node distribute traffic evenly across the registered instances in all enabled AZs.

- For classic LB:

- Disabled by default

- No charges for inter AZ data if enabled

- For App LB:

- Always on (can’t be disabled)

- No charges for inter AZ data

- For Network LB:

- Disabled by default

- You pay charges for inter AZ data if enabled.

- Path patterns (an Application LB feature) let you direct traffic to different EC2 instances based on the URL path in the request.
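As a worked example of why cross-zone load balancing matters, assume two AZs where AZ A has 2 instances and AZ B has 8, and that clients spread requests evenly over the two load balancer nodes (one per AZ). With cross-zone enabled, each of the 10 instances gets 10% of the traffic; with it disabled, each node keeps its 50% inside its own AZ, so the AZ A instances get 25% each and the AZ B instances 6.25% each. The sketch below computes those shares; it assumes every AZ has at least one instance.

```python
from typing import Dict


def per_instance_share(instances_per_az: Dict[str, int], cross_zone: bool) -> Dict[str, float]:
    """Fraction of all traffic received by each instance, keyed by AZ.

    Assumes traffic is split evenly across the AZs' load balancer nodes
    and that every AZ has at least one registered instance.
    """
    total_instances = sum(instances_per_az.values())
    az_count = len(instances_per_az)
    shares = {}
    for az, count in instances_per_az.items():
        if cross_zone:
            # Every node spreads its traffic over all instances in all AZs.
            shares[az] = 1 / total_instances
        else:
            # Each node keeps its 1/az_count slice inside its own AZ.
            shares[az] = (1 / az_count) / count
    return shares
```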

Auto Scaling:

- Has 3 components:

- Groups: logical groupings in which you place your EC2 instances.

- Configuration template: instructions on what to launch (AMI ID, instance type, ...)

- Scaling options: for example, scale on a schedule, or set the number of instances:

- Min: the minimum number of instances that must be in your Auto Scaling group at all times.

- Max: the maximum number of instances your Auto Scaling group can have.

- Desired: the “current amount” of instances in your Auto Scaling group. The group starts by launching as many instances as the desired capacity. When scaling policies are set, the desired capacity is adjusted between the minimum and the maximum.

- Min ≤ desired capacity ≤ max.

- Vertical scaling: change the instance size (scale up: upgrade / scale down: downgrade)

- Horizontal scaling: change the number of instances (scale out: add instances / scale in: remove instances)

Scaling options:

- Maintain current instance levels at all times: for example, maintain 10 instances.

- Scale manually: you manage the process of creating or terminating instances.

- Scale based on a schedule: e.g., on Monday mornings, run 10 instances.

- Scale based on demand: use policies (CPU utilization, ...) that trigger scaling.

- Use predictive scaling: maintain optimal availability and performance by combining predictive scaling (proactive) and dynamic scaling (reactive)

Auto Scaling group policies:

- Target tracking scaling:

- The simplest to set up

- Example: I want the average ASG CPU to stay at around 40%

- Simple / Step scaling

- When a CloudWatch alarm is triggered (e.g., CPU > 70%), add 2 instances

- When a CloudWatch alarm is triggered (e.g., CPU < 30%), remove 1 instance.

- Scheduled Actions:

- Anticipate scaling based on known usage patterns

- Example: increase the min capacity to 10 at 5 pm on Fridays
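The simple/step scaling example (add 2 instances above 70% CPU, remove 1 below 30%) can be sketched as a pure function that also enforces min ≤ desired ≤ max. This is only an illustration: the CloudWatch alarm is reduced to a CPU reading, and `step_scaling` is a hypothetical helper, not an AWS API.

```python
def step_scaling(desired: int, cpu_percent: float, min_size: int, max_size: int) -> int:
    """Return the new desired capacity after one evaluation of the example alarms."""
    if cpu_percent > 70:
        desired += 2          # scale-out alarm: add 2 instances
    elif cpu_percent < 30:
        desired -= 1          # scale-in alarm: remove 1 instance
    # The group never leaves min <= desired <= max.
    return max(min_size, min(desired, max_size))
```

For example, with min 2 and max 10, a group at 9 instances under 85% CPU goes to 10, not 11.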

Scaling Cooldowns:

- The cooldown period helps ensure that your Auto Scaling group doesn't launch or terminate additional instances before the previous scaling activity takes effect.

- In addition to the default cooldown for the Auto Scaling group, we can create cooldowns that apply to a specific simple scaling policy.

- A scaling-specific cooldown period overrides the default cooldown period.

- One common use for scaling-specific cooldowns is with a scale-in policy, i.e. a policy that terminates instances based on a specific criterion or metric. Because this policy terminates instances, Amazon EC2 Auto Scaling needs less time to determine whether to terminate additional instances.

- If the default cooldown period of 300 seconds is too long, you can reduce costs by applying a scaling-specific cooldown period of 180 seconds to the scale-in policy.

- If your app is scaling up and down multiple times each hour, modify the Auto Scaling group cooldown timers and the period of the CloudWatch alarm that triggers the scale-in.

Good to know:

- ASG Default Termination Policy (Simplified version):

- Find the AZ that has the most instances.

- If there are multiple instances in that AZ to choose from, terminate the one with the oldest launch configuration.

- The ASG tries to balance the number of instances across AZs by default.
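The simplified termination policy can be sketched as follows. The `Instance` record is a hypothetical stand-in, the launch configuration's age is represented by its creation time, and ties between equally busy AZs are broken arbitrarily here, whereas the real policy has additional tie-breaking steps.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime
from typing import List


@dataclass
class Instance:
    instance_id: str
    az: str
    launch_config_created: datetime  # when the instance's launch configuration was created


def pick_instance_to_terminate(instances: List[Instance]) -> Instance:
    """Simplified default termination policy: busiest AZ first, then oldest launch configuration."""
    per_az = Counter(instance.az for instance in instances)
    busiest_az = max(per_az, key=per_az.get)
    candidates = [instance for instance in instances if instance.az == busiest_az]
    return min(candidates, key=lambda instance: instance.launch_config_created)
```

Note that the oldest launch configuration overall is not necessarily the one removed: the AZ balance check comes first.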

Lifecycle Hooks:

- By default, as soon as an instance is launched in an ASG, it’s in service.

- You can perform extra steps before the instance goes in service (Pending state)

- You can perform some actions before the instance is terminated (Terminating state)

- Use case: Extract logs from instance before it is completely terminated.

HA Architecture:

- A good article by Netflix about HA on AWS: https://goo.gl/UR2nzM

- Always design for failure.

- Use multiple AZ and multiple regions wherever you can.

- Know the difference between Multi AZ and read replicas for RDS.

- Know the difference between scaling out (Auto Scaling) and scaling up (upgrading a resource)

- Read the question carefully and always consider the cost element.

- Know the different S3 storage classes.

2. Elastic Beanstalk: Instantiating Apps quickly:

- When launching a full stack (EC2, EBS, RDS), it can take time to:

- Install apps and insert initial (or recovery) data.

- Configure everything and launch the app.

To speed up the process:

- EC2 instances:

- Use a golden AMI (apps, OS dependencies, ...)

- Bootstrap using User Data

- Hybrid: mix a golden AMI and User Data (Elastic Beanstalk)

- RDS Databases: restore from a snapshot: the db will have schemas and data ready.

- EBS volumes: restore from a snapshot: the disk will already be formatted and have data.

Typical architecture: 3-tier web app:

If you are deploying many apps, creating and updating the infrastructure becomes a nightmare for a developer. The solution is Elastic Beanstalk, a developer-centric way of deploying an app on AWS.

Three architecture models (this is general, not specific to Beanstalk):

- Single-instance deployment: good for dev

- LB + ASG: great for production or pre-production web apps

- ASG only: great for non web apps in production (workers..)

Three components:

- Application

- Application version: each deployment gets assigned a version

- Environment name (dev, test, prod..): free naming

3. Applications:

SQS: Simple Queue Service.

- Is pull-based, not push-based; it queues messages.

- Decouples the components of an app (if you see the word “decouple”, think SQS)

- Two types of queues:

- Standard queue.

- FIFO queue.

- Messages can be up to 256 KB in size.

- Messages can be kept in the queue from 1 minute to 14 days; the default retention is 4 days.

- Visibility timeout max is 12 hours.

- SQS guarantees that your messages will be processed at least once.

Standard Queue:

- oldest offering (over 10 years old)

- Fully managed

- Scales from 1 message per second to 10,000s per second

- No limit to how many messages can be in the queue

- Low latency (<10ms on publish and receive)

- Horizontal scaling in terms of number of consumers.

- Can have duplicate messages (at least once delivery, occasionally)

- Can have out of order messages (best effort ordering)
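Because a standard queue can deliver the same message more than once, consumers are usually written to be idempotent. The sketch below assumes the producer puts a stable, unique id in each message (a hypothetical business key) and keeps the ids already handled in an in-memory set; a real consumer would need a shared, durable store (for example a database table) instead.

```python
from typing import Callable, Set


class IdempotentConsumer:
    """Wraps a handler so a message delivered twice is only processed once."""

    def __init__(self, handler: Callable[[str], None]) -> None:
        self._handler = handler
        self._seen: Set[str] = set()  # in-memory only: lost on restart

    def consume(self, message_id: str, body: str) -> bool:
        """Process the message unless it was seen before; return True if processed."""
        if message_id in self._seen:
            return False              # duplicate delivery: skip it
        self._handler(body)
        self._seen.add(message_id)    # record only after the handler succeeded
        return True
```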

Delay Queue:

- Delay a message (consumers don’t see it immediately) for up to 15 minutes

- Default is 0 seconds (message is available right away)

- Can set a default at queue level

- Can override the default using the DelaySeconds parameter

Visibility timeout:

- When a consumer polls a message from a queue, the message is “invisible” to other consumers for a defined period: the visibility timeout.

- Set between 0 s and 12 h (default 30 s)

- If it is too high (e.g., 15 min) and the consumer fails to process the message, you must wait a long time before the message can be processed again.

- If it is too low (e.g., 30 s) and the consumer needs more time to process the message (e.g., 2 min), another consumer will receive the message and it will be processed more than once.

- Use the ChangeMessageVisibility API to change the visibility timeout while processing a message

- Use the DeleteMessage API to tell SQS the message was successfully processed.

Dead Letter Queue:

- If a consumer fails to process a message within the visibility timeout, the message goes back to the queue!

- We can set a threshold of how many times a message can go back to the queue; it’s called a “redrive policy”

- After the threshold is exceeded, the message goes into a dead letter queue (DLQ)

- We have to create the DLQ first and then designate it as the dead letter queue

- Make sure to process the messages in the DLQ before they expire!
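The interplay between the visibility timeout and the redrive policy can be simulated with a toy in-memory queue driven by an explicit clock. `ToyQueue` is hypothetical and much simpler than SQS (no retention period, no parallel consumers), but it follows the same rule: once a message has been received `max_receive_count` times without being deleted, the next attempt moves it to the dead letter queue.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class _Message:
    body: str
    receive_count: int = 0
    invisible_until: float = 0.0


class ToyQueue:
    """In-memory toy of SQS visibility timeout + redrive policy (not the real service)."""

    def __init__(self, visibility_timeout: float, max_receive_count: int) -> None:
        self.visibility_timeout = visibility_timeout
        self.max_receive_count = max_receive_count
        self.messages: Dict[int, _Message] = {}
        self.dead_letter: List[str] = []
        self._next_id = 0

    def send(self, body: str) -> int:
        msg_id = self._next_id
        self.messages[msg_id] = _Message(body)
        self._next_id += 1
        return msg_id

    def receive(self, now: float) -> Optional[int]:
        """Return the id of one visible message and hide it, or None if nothing is visible."""
        for msg_id, msg in list(self.messages.items()):
            if msg.invisible_until > now:
                continue  # still hidden from other consumers
            if msg.receive_count >= self.max_receive_count:
                # Redrive policy: too many failed attempts, move it to the DLQ.
                self.dead_letter.append(msg.body)
                del self.messages[msg_id]
                continue
            msg.receive_count += 1
            msg.invisible_until = now + self.visibility_timeout
            return msg_id
        return None

    def delete(self, msg_id: int) -> None:
        """Equivalent of DeleteMessage: the consumer finished successfully."""
        self.messages.pop(msg_id, None)
```

With a 30 s timeout and `max_receive_count=2`, a message that is never deleted is received at t=0 and t=31, then lands in the DLQ on the next attempt.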

Long Polling:

- When a consumer requests messages from the queue, it can optionally “wait” for messages to arrive if there are none in the queue

- This is called long polling

- Long polling decreases the number of API calls made to SQS while increasing the efficiency and reducing the latency of your application

- The wait time can be between 1 s and 20 s (20 s is preferable)

- Long polling is preferable to short polling

- Long Polling can be enabled at the queue level or at the API level using WaitTimeSeconds.
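Here is a minimal sketch of a long-polling consumer, assuming boto3's SQS client (`receive_message` with `WaitTimeSeconds=20`, then `delete_message` for each successfully handled message). The client is passed in as a parameter so the loop can be exercised without AWS; the handler and queue URL are placeholders.

```python
from typing import Any, Callable


def poll_once(sqs: Any, queue_url: str, handle: Callable[[str], None]) -> int:
    """Long-poll the queue once and delete every message handled successfully.

    `sqs` is expected to behave like boto3.client("sqs").
    Returns the number of messages deleted.
    """
    response = sqs.receive_message(
        QueueUrl=queue_url,
        MaxNumberOfMessages=10,   # SQS returns at most 10 messages per call
        WaitTimeSeconds=20,       # long polling: wait up to 20 s for messages
    )
    deleted = 0
    for message in response.get("Messages", []):  # key is absent when the queue is empty
        try:
            handle(message["Body"])
        except Exception:
            # Do not delete: the message reappears after the visibility
            # timeout and counts toward the redrive policy.
            continue
        sqs.delete_message(QueueUrl=queue_url, ReceiptHandle=message["ReceiptHandle"])
        deleted += 1
    return deleted
```

Against a real queue (credentials required) you would call it in a loop, e.g. `poll_once(boto3.client("sqs"), queue_url, handle)`.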

FIFO Queue:

- Newer offering (First In – First Out) – not available in all regions

- Name of the queue must end in .fifo

- Lower throughput (up to 3000 per second with batching, 300/s without)

- Messages are processed in order by the consumer

- Messages are processed exactly once

- No per-message delay (only a per-queue delay)

- Ability to do content-based deduplication (duplicate messages are not delivered again)

- 5-minute deduplication interval using the “Deduplication ID”
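Content-based deduplication can be modelled with a hash of the message body: when it is enabled, SQS derives the deduplication ID from a SHA-256 hash of the body. The class below is a toy, in-memory sketch of that behaviour within the 5-minute window, not the service itself.

```python
import hashlib
from typing import Dict

DEDUP_WINDOW_SECONDS = 5 * 60  # FIFO deduplication interval


class ContentDeduplicator:
    """Toy model of FIFO content-based deduplication.

    A second message with the same body inside the window is accepted by
    the API call but not delivered again; here that is a False return.
    """

    def __init__(self) -> None:
        self._first_seen: Dict[str, float] = {}

    def accept(self, body: str, now: float) -> bool:
        dedup_id = hashlib.sha256(body.encode("utf-8")).hexdigest()
        first = self._first_seen.get(dedup_id)
        if first is not None and now - first < DEDUP_WINDOW_SECONDS:
            return False  # duplicate inside the window: dropped
        self._first_seen[dedup_id] = now
        return True
```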

SWF: Simple Workflow Service

- It makes it easy to coordinate work across distributed apps, web service calls, executable code, human actions, and scripts.

- Workflow executions can last up to 1 year.

- SWF keeps track of all the tasks and events in the app.

- Think tasks and workflows.

- SWF actors: workflow starters, deciders, activity workers.

SNS: Simple Notification Service

- It allows you to push notifications to Apple, Google, Fire OS, and Windows devices, as well as Android devices in China with Baidu Cloud Push.

- Notify by SMS text message.

- SNS Benefits:

- Instantaneous, push-based delivery (no polling)

- Simple APIs and easy integration with apps.

- Flexible message delivery over multiple transport protocols.

- Inexpensive, pay as you go model with no upfront costs.

- The web-based AWS Management Console offers the simplicity of a point-and-click interface

- SNS (push) and SQS (poll/pull)

- The “event producer” only sends a message to one SNS topic.

- As many “event receivers” (subscriptions) as we want listen to the SNS topic notifications

- Each subscriber to the topic gets all the messages (note: a newer feature lets you filter messages)

- Up to 10,000,000 subscriptions per topic

- 100,000 topics limit

- Subscribers can be:

- SQS

- HTTP/HTTPS (with delivery retries – how many times)

- Lambda

- Emails

- SMS messages

- Mobile notifications

SNS + SQS: Fan Out (important for exam)

- Push once in SNS, receive in many SQS

- Fully decoupled

- No data loss

- Ability to add receivers of data later

- SQS allows for delayed processing

- SQS allows for retries of work

- May have many workers on one queue and one worker on the other queue.
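The fan-out pattern can be illustrated with plain Python deques standing in for SQS queues: one publish call puts a copy of the message in every subscribed queue, and each queue is then consumed independently. `ToyTopic` is a hypothetical model, not the SNS API.

```python
from collections import deque
from typing import Deque, List


class ToyTopic:
    """In-memory model of SNS -> SQS fan-out (not the SNS API)."""

    def __init__(self) -> None:
        self.subscribers: List[Deque[str]] = []

    def subscribe(self, queue: Deque[str]) -> None:
        self.subscribers.append(queue)

    def publish(self, message: str) -> None:
        # The producer publishes once; every subscribed queue receives its own copy.
        for queue in self.subscribers:
            queue.append(message)


topic = ToyTopic()
shipping, analytics = deque(), deque()
topic.subscribe(shipping)
topic.subscribe(analytics)
topic.publish("order-42")
shipping.popleft()        # the shipping worker consumes its copy...
print(list(analytics))    # ...while analytics still has its own: ['order-42']
```

A queue subscribed later only receives messages published after it joined, which is why the SNS topic is usually created together with its first subscribers.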

Elastic Transcoder:

- Media transcoder in the cloud.

- Convert media files from their original source format into different formats that will play on smartphones, tablets, PCs..

- Provides transcoding presets for popular output formats.

- Pay based on the minutes that you transcode and the resolution at which you transcode.

API Gateway:

- A doorway to your AWS environment (e.g., to connect to Lambda).

- It can expose HTTPS endpoints to define a RESTful API.

- Connect serverlessly to services like Lambda & DynamoDB

- Send each API endpoint to a different target.

- Run efficiently with low cost.

- Scale effortlessly.

- Track and control usage by API key

- Throttle requests to prevent attacks

- Connect to CloudWatch to log all requests for monitoring.

- Maintain multiple versions of your API

- If you are using JS/AJAX that uses multiple domains with API gateway, ensure that you have enabled CORS on API gateway.

- CORS is enforced by the client (the browser).

- Enable API caching in Amazon API Gateway to cache your endpoint’s response.

- To avoid repeated requests and reduce latency, create a cache for a stage and configure a TTL

- If you get an “Error: Origin policy cannot be read at the remote resource”, you need to enable CORS on API Gateway.

API Gateway – Security (Important for Exam)

- IAM Permissions:

- Create an IAM authorization policy and attach it to a User / Role

- API Gateway verifies the IAM permissions passed by the calling application

- Good to provide access within your own infrastructure

- Leverages the “Sig v4” capability, where IAM credentials are in headers.

- Lambda Authorizer (formerly Custom Authorizers):

- Uses AWS Lambda to validate the token being passed in the header

- Option to cache the result of authentication

- Helps to use OAuth / SAML / 3rd party types of authentication

- Lambda must return an IAM policy for the user

- Cognito User Pools:

- Cognito fully manages user lifecycle

- API gateway verifies identity automatically from AWS Cognito

- No custom implementation required

- Cognito only helps with authentication, not authorization
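A Lambda authorizer of the TOKEN type receives the token from the request header (`authorizationToken`) plus the ARN of the method being called (`methodArn`), and must return a principal id and an IAM policy. The sketch below shows that contract; the hard-coded `EXPECTED_TOKEN` and the principal id are hypothetical placeholders, and a real authorizer would verify a signed token (for example an OAuth/JWT token) instead.

```python
import hmac
from typing import Any, Dict

# Hypothetical shared secret for illustration only; a real authorizer would
# validate a signed token (e.g. a JWT issued by an OAuth provider).
EXPECTED_TOKEN = "allow-me"


def build_policy(principal_id: str, effect: str, resource: str) -> Dict[str, Any]:
    """Shape of the response API Gateway expects from a Lambda authorizer."""
    return {
        "principalId": principal_id,
        "policyDocument": {
            "Version": "2012-10-17",
            "Statement": [
                {"Action": "execute-api:Invoke", "Effect": effect, "Resource": resource}
            ],
        },
    }


def lambda_handler(event: Dict[str, Any], context: Any) -> Dict[str, Any]:
    """TOKEN authorizer: allow the call only if the header token matches."""
    token = event.get("authorizationToken") or ""
    # compare_digest avoids leaking information through comparison timing.
    allowed = hmac.compare_digest(token.encode("utf-8"), EXPECTED_TOKEN.encode("utf-8"))
    return build_policy("example-user", "Allow" if allowed else "Deny", event["methodArn"])
```

Because the result can be cached, the returned policy should describe what this caller may do, not just the single method in the current request, if caching is enabled.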

IAM vs Custom Authorizer vs Cognito User Pool:

- IAM:

- Great for users, roles already within your AWS account

- Handle authentication + authorization

- Leverage Sig v4

- Custom Authorizer:

- Great for 3rd party token

- Very flexible in terms of what IAM policy is returned

- Handle Authentication + Authorization

- Pay per Lambda invocation

- Cognito User Pool:

- You manage your own user pool (can be backed by Facebook, Google, ...)

- No need to write any custom code

- Must implement authorization in the backend

Kinesis:

- A platform on AWS to send your streaming data.

- Kinesis is a managed alternative to Apache Kafka

- Great for app logs, metrics, IoT, clickstreams.

- Great for real time big data.

- Data is automatically replicated across 3 AZs

- 3 types of Kinesis:

- Kinesis Streams (24 hours to 7 days retention): different sources stream data to Kinesis, the data is stored in “shards”, and EC2 “consumers” can analyze the shards and then store the results in DynamoDB, S3, Redshift, or EMR.

- Kinesis Firehose (S3) (no data persistence): different sources send data to Kinesis Firehose, which can process it and then deliver it to S3 or other destinations (Splunk, Redshift, Elasticsearch)

- Kinesis Analytics: analyze data on the fly inside Kinesis, then send the results to Redshift, an Elasticsearch cluster, S3, ...

Kinesis Streams overview:

- Streams are divided in ordered Shards / Partitions

- Data retention is 1 day by default, can go up to 7 days

- Ability to reprocess / replay data

- Multiple apps can consume the same stream

- Real-time processing with scale of throughput

- Once data is inserted in Kinesis, it can’t be deleted (immutability)
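Records are routed to shards by their partition key: Kinesis takes the MD5 hash of the key as a 128-bit integer and sends the record to the shard whose hash key range contains it, which is why all records with the same key stay in order on one shard. The sketch below assumes the shards split the hash space into roughly equal ranges, as for a freshly created stream; after shard splits or merges the actual ranges would have to be read from the stream.

```python
import hashlib

HASH_SPACE = 2 ** 128  # partition keys are hashed into a 128-bit integer space


def shard_for_key(partition_key: str, shard_count: int) -> int:
    """Index of the shard whose hash-key range contains the key's MD5 hash.

    Assumes `shard_count` equal, contiguous ranges over the hash space.
    """
    hash_value = int(hashlib.md5(partition_key.encode("utf-8")).hexdigest(), 16)
    return hash_value * shard_count // HASH_SPACE
```

A key such as a user id therefore keeps that user's events ordered, while many distinct keys spread the load across shards.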

Kinesis Data streams vs Firehose

- Streams:

- You write custom code (producer / consumer)

- Real time (~200ms)

- Must manage scaling (Shard splitting / merging)

- Data storage for 1 to 7 days, replay capability, multi consumers

- Firehose:

- Fully managed, send to S3, Splunk, Redshift, ElasticSearch

- Serverless data transformations with Lambda

- Near real time (lowest buffer time is 1 minute)

- Automated scaling

- No data storage

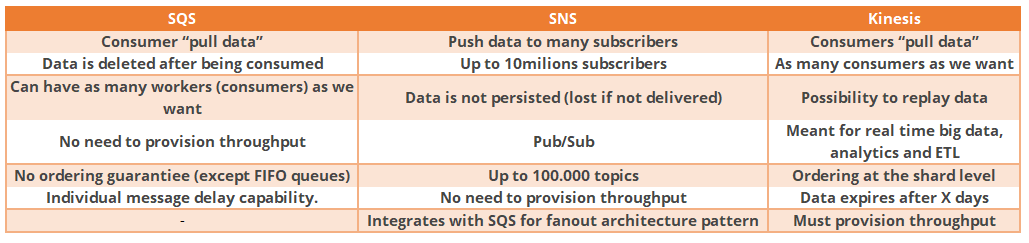

SQS vs SNS vs Kinesis:

Amazon MQ (important for exam)

- SQS, SNS are cloud native services and they are using proprietary protocols from AWS

- Traditional apps running on-premise may use open protocols such as MQTT, AMQP, STOMP, OpenWire, WSS

- When migrating to the cloud, instead of re-engineering the app to use SQS and SNS, we can use Amazon MQ

- Amazon MQ: managed Apache ActiveMQ.

This blog post covers AWS services related to Lambda, SAM, advanced IAM, disaster recovery, and migration services.

1. Lambda:

- History of the cloud: Lambda is the ultimate abstraction layer:

data center, hardware, assembly code/protocols, high-level languages, OS, application layer/AWS APIs, AWS Lambda

- You can use Lambda in the following ways:

- as an event-driven compute service.

- as a compute service to run your code in response to HTTP requests.

- Lambda supports Node.js, Java, Python, C#, Go, and PowerShell.

- Serverless architectures typically combine API Gateway and Lambda.

- AWS X-Ray can debug serverless architectures.

- Pricing:

- Number of requests: the first 1 million requests are free, then $0.20 per 1 million requests thereafter.

- Duration: calculated from the time your code begins executing until it returns or otherwise terminates, rounded up to the nearest 100 ms (AWS has since moved to 1 ms granularity). You are charged $0.00001667 for every GB-second used.

- Why Lambda is cool:

- no servers, continuous scaling, cheap

- CloudWatch for lambda metrics:

- Latency per request

- Total number of requests
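Plugging the prices above into a small calculator makes the model easier to reason about. For example, 3 million invocations a month at 512 MB, each running 250 ms (billed as 300 ms with 100 ms rounding), cost $0.40 for requests plus 450,000 GB-seconds × $0.00001667 ≈ $7.50 for duration, about $7.90 in total. The sketch uses only the figures quoted in this post, ignores the compute free tier, and prices may have changed since.

```python
import math

FREE_REQUESTS = 1_000_000
PRICE_PER_MILLION_REQUESTS = 0.20   # USD, figure quoted above
PRICE_PER_GB_SECOND = 0.00001667    # USD, figure quoted above
BILLING_INCREMENT_MS = 100          # rounding quoted above (now 1 ms)


def monthly_lambda_cost(requests: int, duration_ms: float, memory_mb: int) -> float:
    """Estimated monthly cost in USD; ignores the compute free tier."""
    billable_requests = max(0, requests - FREE_REQUESTS)
    request_cost = billable_requests / 1_000_000 * PRICE_PER_MILLION_REQUESTS
    # Each invocation's duration is rounded up to the next billing increment.
    billed_ms = math.ceil(duration_ms / BILLING_INCREMENT_MS) * BILLING_INCREMENT_MS
    gb_seconds = requests * (billed_ms / 1000) * (memory_mb / 1024)
    return request_cost + gb_seconds * PRICE_PER_GB_SECOND
```

Note how memory drives cost: doubling the memory doubles the GB-seconds unless the function also finishes faster.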

AWS Lambda Limits to know:

- Execution:

- Memory allocation: 128 MB to 3008 MB (64 MB increments)

- Maximum execution time: 300 s (5 minutes); now 15 minutes, but the exam assumes 5 minutes

- Disk capacity in the “function container” (in /tmp): 512 MB

- Concurrency limit: 1000

- Deployment:

- Lambda function deployment size (compressed .zip): 50 MB

- Size of uncompressed deployment (code + dependencies): 250 MB

- Can use the /tmp directory to load other files at startup

- Size of environment variables: 4KB

Lambda@Edge:

- You have deployed a CDN using CloudFront

- What if you want to run a global AWS Lambda alongside it?

- Or how do you implement request filtering before requests reach your application?

- For this, you can use Lambda@Edge: deploy Lambda functions alongside your CloudFront CDN

- Build more responsive apps

- You dont manage servers, Lambda is deployed globally

- Customize the CDN content

- Pay only for what you use

- You can use Lambda to change CloudFront requests and responses:

- After CloudFront receives a request from a viewer (viewer request)

- Before CloudFront forwards the request to the origin (origin request)

- After CloudFront receives the response from the origin (origin response)

- Before CloudFront forwards the response to the viewer (viewer response)

- You can also generate responses to viewers without ever sending the request to the origin
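As an example of request filtering at the edge, here is a minimal viewer-request handler in Python, one of the runtimes Lambda@Edge supports. Returning the request lets CloudFront carry on; returning a response object answers the viewer directly, without contacting the origin. Rejecting requests that lack an `Authorization` header is a hypothetical policy chosen for illustration.

```python
from typing import Any, Dict


def lambda_handler(event: Dict[str, Any], context: Any) -> Dict[str, Any]:
    """Viewer-request trigger: reject requests without an Authorization header."""
    request = event["Records"][0]["cf"]["request"]
    headers = request["headers"]  # keys are lower-case header names
    if "authorization" not in headers:
        # Generated response: CloudFront answers the viewer, the origin is never called.
        return {
            "status": "401",
            "statusDescription": "Unauthorized",
            "headers": {
                "content-type": [{"key": "Content-Type", "value": "text/plain"}]
            },
            "body": "Missing credentials",
        }
    # Returning the (possibly modified) request lets CloudFront continue.
    return request
```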

Use cases:

- Website security and privacy

- Dynamic Web Application at the Edge

- Search Engine Optimization (SEO)

- Intelligently Route Across Origins and Data Centers

- Bot Mitigation at the Edge

- Real time image transformation

- A/B testing

- User authentication and authorization

- User prioritization

- User tracking and analytics

4. AWS SAM – Serverless Application Model

- Framework for developing, testing, and deploying serverless applications

- All the configuration is YAML code, covering:

- Lambda Function

- DynamoDB

- API Gateway

- Cognito User Pools

- SAM can help you to run Lambda, API Gateway, DynamoDB locally.

- SAM can use CodeDeploy to deploy Lambda functions

5. Example of Serverless Architecture:

.: Mobile App: MyTodolist:

- We want to create a mobile app with the following requirements:

- Expose as REST API with HTTPS

- Serverless architecture

- Users should be able to directly interact with their own folder in S3

- Users should authenticate through a managed serverless service

- The users can write and read todos, but they mostly read them

- The database should scale, and have some high read throughput.

Mobile app: REST API layer:

Mobile app: giving users access to S3

!! Common exam question: don’t store credentials on the mobile device; get temporary credentials through Cognito/STS instead.
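To let each user touch only their own folder, the IAM role attached to the Cognito identity pool can scope S3 access with the `${cognito-identity.amazonaws.com:sub}` policy variable (the user's Cognito identity id). The sketch builds such a policy as a Python dict; the bucket name is hypothetical, and the exact set of S3 actions is an assumption you would adapt to the app.

```python
from typing import Any, Dict

# Policy variable resolved by IAM to the caller's Cognito identity id.
USER_ID = "${cognito-identity.amazonaws.com:sub}"


def per_user_s3_policy(bucket: str) -> Dict[str, Any]:
    """IAM policy letting each Cognito user read/write only s3://<bucket>/<identity id>/..."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Object access restricted to the user's own prefix.
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:PutObject", "s3:DeleteObject"],
                "Resource": [f"arn:aws:s3:::{bucket}/{USER_ID}/*"],
            },
            {
                # Listing allowed only for keys under the user's prefix.
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": [f"arn:aws:s3:::{bucket}"],
                "Condition": {"StringLike": {"s3:prefix": [f"{USER_ID}/*"]}},
            },
        ],
    }
```

`json.dumps(per_user_s3_policy("mytodolist-user-files"))` gives the JSON to attach to the identity pool's authenticated role; the app then uses the temporary credentials from Cognito, never long-term keys on the device.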

Mobile app: high read throughput, static data

Mobile app: caching at the API Gateway:

.: Serverless hosted website: MyBlog.com:

- This website should scale globally

- Blogs are rarely written, but often read

- Some of the website is purely static files, the rest is a dynamic REST API

- Caching must be implemented where possible

- Any new user who subscribes should receive a welcome email

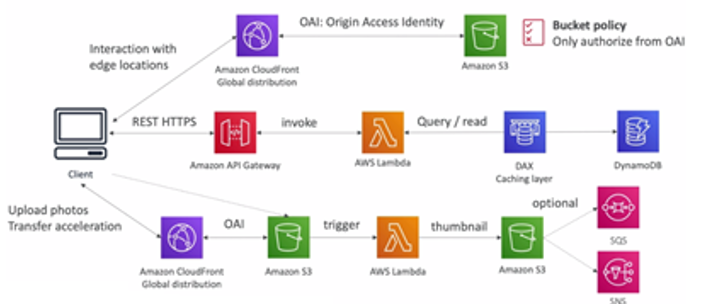

- Any photo uploaded to the blog should have a thumbnail generated

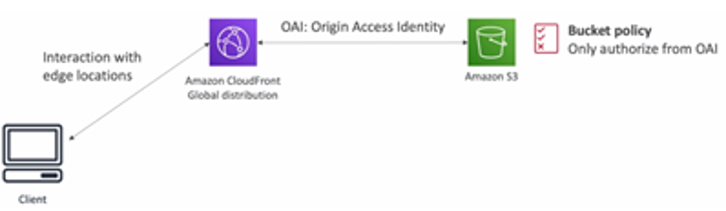

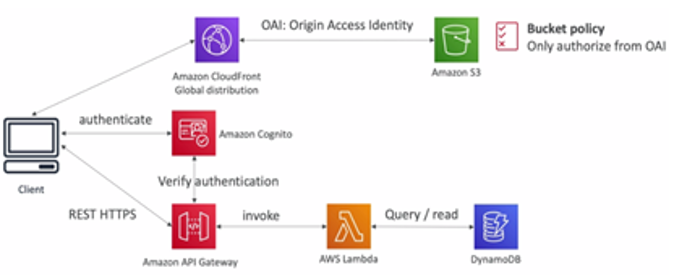

Serving static content, globally, securely:

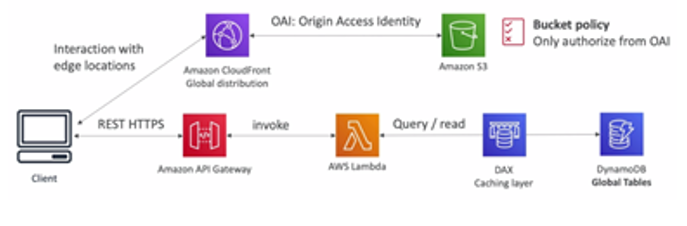

Adding a public serverless REST API

Leveraging DynamoDB Global Tables

User Welcome email flow

Thumbnail Generation flow:

.: Distributing paid content

- We sell videos online and users have to pay to buy videos

- Each video can be bought by many different customers

- We only want to distribute videos to users who are premium users

- We have a database of premium users

- Links we send to premium users should be short-lived

- Our application is global

- We want to be fully serverless

Start simple, premium user service:

Distributed Globally and Secure:

Distribute Content only to premium users:

Premium User Video Service:

- We have implemented a fully serverless solution:

- Cognito for authentication

- DynamoDB for storing users that are premium

- 2 serverless applications

- Premium User registration

- CloudFront Signed URL generator

- Content is stored in S3 (serverless and scalable)

- Integrated with CloudFront with OAI for security (users can’t bypass CloudFront)

- CloudFront can only be accessed using signed URLs, to prevent unauthorized access

- What about S3 Signed URL? They are not efficient for global access.

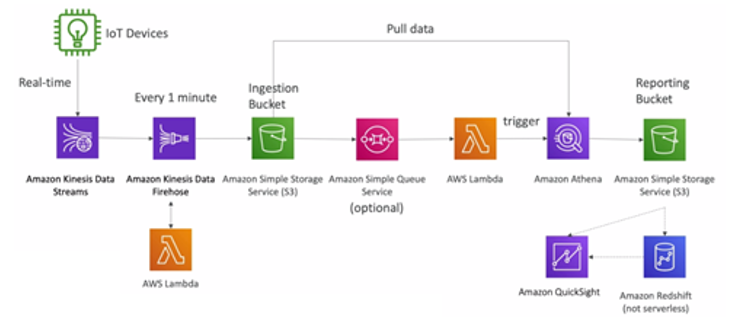

.: Big Data Ingestion Pipeline:

- We want the ingestion pipeline to be fully serverless

- We want to collect data in real time

- We want to transform the data

- We want to query the transformed data using SQL

- The reports created using the queries should be in S3

- We want to load that data into a warehouse and create dashboards.

Big Data Ingestion Pipeline:

- IoT Core allows you to harvest data from IoT devices

- Kinesis is great for real time data collection

- Firehose helps with data delivery to S3 in near real time (1min)

- Lambda can help Firehose with data transformations

- Amazon S3 can trigger notifications to SQS

- Lambda can subscribe to SQS (we could also have connected S3 directly to Lambda)

- Athena is a serverless SQL service, and its results are stored in S3

- The reporting bucket contains analyzed data and can be used by reporting tools such as AWS QuickSight, Redshift, etc.
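The "Lambda can help Firehose with data transformations" step follows a fixed contract: Firehose invokes the function with a batch of base64-encoded records, and the function must return every `recordId` with a `result` of `Ok`, `Dropped`, or `ProcessingFailed` plus the (re-encoded) data. The sketch below assumes JSON input records; the added `processed` field is a hypothetical transformation, and records are newline-terminated so that Athena can read one JSON object per line.

```python
import base64
import json
from typing import Any, Dict, List


def lambda_handler(event: Dict[str, Any], context: Any) -> Dict[str, List[Dict[str, str]]]:
    """Firehose transformation: parse each JSON record, tag it, re-encode it."""
    output = []
    for record in event["records"]:
        try:
            payload = json.loads(base64.b64decode(record["data"]))
            payload["processed"] = True  # hypothetical transformation
            data = (json.dumps(payload) + "\n").encode("utf-8")  # one object per line
            output.append({
                "recordId": record["recordId"],
                "result": "Ok",
                "data": base64.b64encode(data).decode("ascii"),
            })
        except (ValueError, TypeError):
            # Not valid base64/JSON, or not a JSON object: return it unchanged and flag it.
            output.append({
                "recordId": record["recordId"],
                "result": "ProcessingFailed",
                "data": record["data"],
            })
    return {"records": output}
```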

6. Identity and Access Management (IAM) – Advanced:

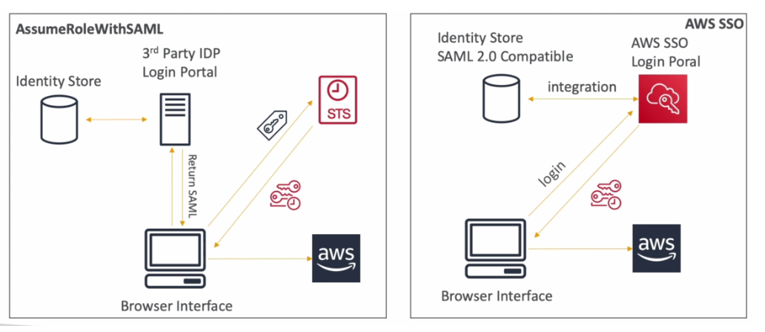

AWS STS: Security Token Service:

- Allows you to grant limited and temporary access to AWS resources.

- Tokens are valid for a limited time (one hour by default) and must be refreshed

- AssumeRole:

- Within your own account: for enhanced security

- Cross-account access: assume a role in the target account to perform actions there

- AssumeRoleWithSAML:

- Returns credentials for users logged in with SAML

- AssumeRoleWithWebIdentity:

- Returns credentials for users logged in with an IdP (Facebook, Google, any OIDC-compatible provider, ...)

- AWS recommends against using this and using Cognito instead

- GetSessionToken:

- for MFA, from a user or AWS account root user.
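AssumeRole in practice: the sketch below calls STS through a boto3-style client (`assume_role` with `RoleArn`, `RoleSessionName`, `DurationSeconds`) and turns the temporary credentials into keyword arguments for another client. The client is injected so the function can be tested without AWS; the role ARN and session name in the usage line are hypothetical.

```python
from typing import Any, Dict


def assume_role_credentials(sts: Any, role_arn: str, session_name: str,
                            duration_seconds: int = 3600) -> Dict[str, str]:
    """Call STS AssumeRole and return kwargs for boto3.client(..., **kwargs).

    `sts` is expected to behave like boto3.client("sts").
    """
    response = sts.assume_role(
        RoleArn=role_arn,
        RoleSessionName=session_name,
        DurationSeconds=duration_seconds,  # 3600 s = one hour
    )
    creds = response["Credentials"]
    # Temporary credentials always come as a key id, a secret, and a session token.
    return {
        "aws_access_key_id": creds["AccessKeyId"],
        "aws_secret_access_key": creds["SecretAccessKey"],
        "aws_session_token": creds["SessionToken"],
    }
```

For a cross-account call: `boto3.client("s3", **assume_role_credentials(boto3.client("sts"), "arn:aws:iam::111122223333:role/ExampleRole", "example-session"))`. When the credentials expire, call it again.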

Identity Federation in AWS:

Federation lets users outside of AWS assume a temporary role for accessing AWS resources.

- These users assume an access role provided through their identity provider

- Federation can have many flavors:

- SAML 2.0 (AD, ADFS)

- Custom Identity Broker

- Web Identity Federation with Amazon Cognito

- Single Sign-On

- Non-SAML with AWS Managed Microsoft AD

- Using federation, you don’t need to create IAM users (user management is outside AWS)

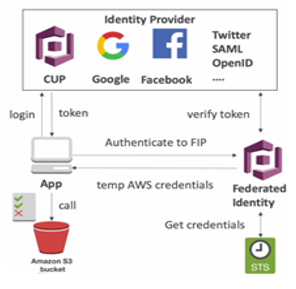

AWS Cognito:

Goal: provide direct access to AWS resources from the client side (mobile, web app)

- Example: provide (temporary) access to write to an S3 bucket using Facebook login

- Problem: we don’t want to create IAM users for our app users

- How:

- Log in to a federated identity provider, or remain anonymous

- Get temporary AWS credentials back from the Federated Identity Pool

- These credentials come with a predefined IAM policy stating their permissions.

- User pools: user directories: signup and signin, account recovery.

- Identity pools: provide temporary AWS credentials to access AWS services like S3 or DynamoDB (authorization).

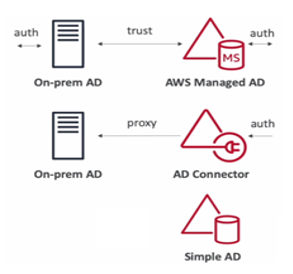

AWS Directory Services:

- AWS Managed MS AD:

- Create your own AD In AWS, manage users locally, support MFA

- Establish a “trust” connection with your on-premise AD

- AD Connector:

- Directory Gateway (proxy) to redirect to on-premise AD

- Users are managed on the on-premise AD

- Simple AD:

- AD compatible managed directory on AWS

- Cannot be joined with on-premise AD

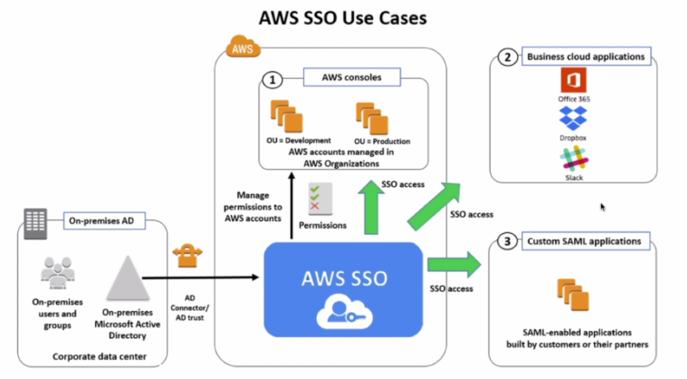

AWS Single Sign-On (SSO) (exam)

Centrally manage SSO to access multiple accounts and 3rd-party business apps

- Integrated with AWS Organizations

- Supports SAML 2.0 markup

- Integration with on-premise AD

- Centralized permission management

- Centralized auditing with CloudTrail

SSO use case: Setup with AD:

SSO vs AssumeRoleWithSAML

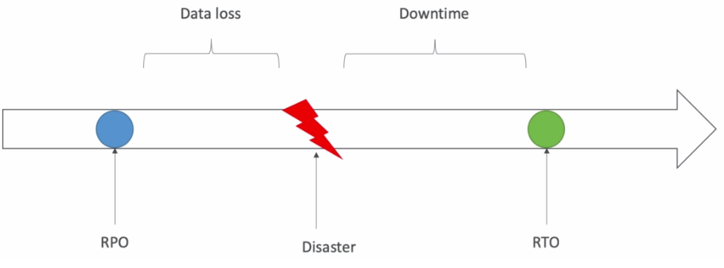

7. Disaster Recovery & Migration services:

- Overview:

- RPO: Recovery Point Objective

- RTO: Recovery Time Objective

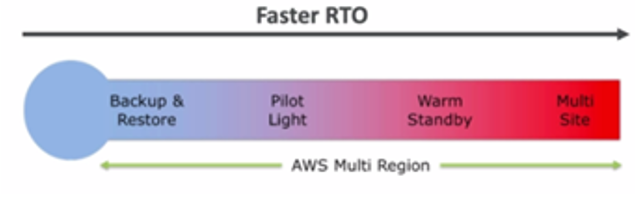

- Disaster Recovery Strategies:

- Backup and restore

- Pilot Light

- Warm standby

- Hot site / Multi site Approach

Migration services: (Important for exam)

- Migrating to the same DB engine: use AWS DMS (Database Migration Service)

- Migrating to a different DB engine: use the AWS Schema Conversion Tool (SCT)

- Example: Migration: Oracle to Redshift

- Convert the schema using the AWS Schema Conversion Tool

- Migrate the database using the AWS Database Migration Service (DMS)

On Premise strategy with AWS:

Transferring large amount of data into AWS:

- High Performance Computing (HPC): (Exam)

- The cloud is the perfect place to perform HPC

- You can create a very high number of resources in no time

- You can speed up time to results by adding more resources.

- You can pay only for the systems you have used.

- Perform genomics, computational chemistry, financial risk modeling, weather prediction, machine learning, deep learning, autonomous driving.

Which services help perform HPC?

- Data Management & transfer:

- AWS Direct Connect: Move GB/s of data to the cloud over a private secure network

- Snowball & Snowmobile: Move PB of data to the cloud

- AWS DataSync: Move large amount of data between on-premise and S3, EFS, FSx for Windows.

- Compute & networking:

- EC2 Instances:

- CPU optimized, GPU optimized.

- Spot Instances / Spot Fleets for cost savings + Auto Scaling

- EC2 Placement Groups: Cluster for good network performance

- EC2 Enhanced Networking (SR-IOV)

- Higher bandwidth, higher PPS (packets per second), lower latency

- Option 1: Elastic Network Adapter (ENA), up to 100 Gbps

- Option 2: Intel 82599 VF, up to 10 Gbps (LEGACY)

- Elastic Fabric Adapter (EFA)

- Improved ENA for HPC; only works on Linux

- Great for inter-node communications and tightly coupled workloads

- Leverages Message Passing Interface (MPI) standard

- Bypass the underlying Linux OS to provide low latency reliable transport

- Storage:

- Instance-attached storage:

- EBS: scale up to 64,000 IOPS with Provisioned IOPS

- Instance Store: scale to millions of IOPS, linked to the EC2 instance, low latency

- Network Storage:

- S3: large blob, not a file system

- EFS: scale IOPS based on total size, or use provisioned IOPS

- FSx for Lustre: HPC-optimized distributed file system, millions of IOPS

- Backed by S3.

- Automation & Orchestration:

- AWS Batch

- AWS Batch supports multi-node parallel jobs, which enable you to run a single job that spans multiple EC2 instances.

- Easily schedule jobs and launch EC2 instances accordingly

- AWS ParallelCluster

- Open source cluster management tool to deploy HPC on AWS

- Configure with text files

- Automate the creation of the VPC, subnet, cluster type, and instance types.


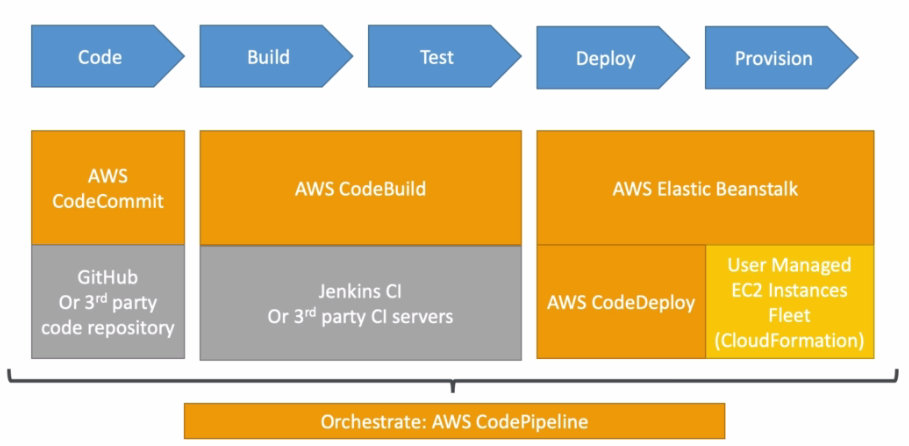

8. CI-CD: Continuous Integration / Continuous Delivery

Technology stack for CI CD:

AWS ECS – Elastic Container Service:

- ECS is a container orchestration service

- ECS helps you run Docker containers on EC2 machines

- ECS is complicated, and made of:

- ECS Core: running ECS on user-provisioned EC2 instances

- Fargate: running ECS tasks on AWS-provisioned compute (serverless)

- EKS: running Kubernetes managed by AWS (on EC2)

- ECR: Docker container registry hosted by AWS

- ECS & Docker are very popular for microservices

- For now, for the exam, only “ECS Core” & ECR are in scope

- IAM security and roles at the ECS task level

What’s Docker?

- Docker is a container technology

- Run a containerized application on any machine with Docker installed

- Containers allow our application to work the same way anywhere

- Containers are isolated from each other

- Control how much memory / CPU is allocated to your container

- Ability to restrict network rules

- More efficient than virtual machines

- Scale containers up and down very quickly (seconds)

AWS ECS – use cases

- Run microservices:

- Ability to run multiple docker containers on the same machine

- Easy service discovery features to enhance communication

- Direct integration with Application Load Balancers

- Auto scaling capability

- Run batch processing / scheduled tasks

- Schedule ECS containers to run on On-Demand / Reserved / Spot instances

- Migrate applications to the cloud

- Dockerize legacy applications running on premise

- Move Docker containers to run on ECS
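The dynamic port mapping mentioned in the Application Load Balancer section comes from the task definition: setting `hostPort` to 0 (in bridge network mode) lets ECS pick a free host port for each container, and the ALB target group tracks those ports. The sketch builds such a task definition and registers it through a boto3-style ECS client (`register_task_definition`); the family name, image, and resource sizes are hypothetical.

```python
from typing import Any, Dict


def web_task_definition(family: str, image: str) -> Dict[str, Any]:
    """Task definition for one web container using dynamic host port mapping."""
    return {
        "family": family,
        "networkMode": "bridge",  # dynamic port mapping applies in bridge mode
        "containerDefinitions": [
            {
                "name": "web",
                "image": image,
                "memory": 256,    # hard memory limit in MiB
                "cpu": 128,       # CPU units (1024 = one vCPU)
                "essential": True,
                # hostPort 0: ECS chooses a free ephemeral port on the EC2 host.
                "portMappings": [{"containerPort": 80, "hostPort": 0, "protocol": "tcp"}],
            }
        ],
    }


def register(ecs: Any, family: str, image: str) -> str:
    """Register the task definition; `ecs` is expected to behave like boto3.client("ecs")."""
    response = ecs.register_task_definition(**web_task_definition(family, image))
    return response["taskDefinition"]["taskDefinitionArn"]
```

Because each copy of the container gets its own host port, several copies of the same task can run on one EC2 instance behind a single ALB.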

Amazon EMR:

- EMR Stands for “Elastic MapReduce”

- EMR helps create Hadoop clusters (Big Data) to analyze and process vast amounts of data.

- The clusters can be made of hundreds of EC2 instances.

- Also supports Apache Spark, HBase, ..

AWS Glue

- Fully managed ETL (Extract, Transform & Load) service

- Automates time-consuming steps of data preparation for analytics.

- Is Serverless

AWS Opsworks:

- Chef & Puppet help you perform server configuration automatically, or perform repetitive actions

- AWS Opsworks: Managed Chef & Puppet

AWS Workspaces:

- Managed, secure cloud desktops.

- Great to eliminate management of on-premise VDI (Virtual Desktop Infrastructure)

- On demand, pay per usage.

AWS AppSync:

- Store and sync data across mobile and web apps in real-time.

- Makes use of GraphQL (a query language developed by Facebook)

- Client Code can be generated automatically

- Integrations with DynamoDB / Lambda

- Real time subscriptions

- Offline data synchronization (replaces Cognito sync)

- Fine Grained Security

Run Command:

- A tool that can be run from the AWS Management Console to execute a script (e.g., PowerShell) on all target EC2 instances.
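Run Command can also be driven from code rather than the console. The sketch below assumes boto3's Systems Manager client (`send_command`) and the AWS-managed `AWS-RunPowerShellScript` document; the instance ids and commands are placeholders, and the instances must run the SSM Agent with permissions to talk to Systems Manager.

```python
from typing import Any, List


def run_powershell(ssm: Any, instance_ids: List[str], commands: List[str]) -> str:
    """Send PowerShell commands to EC2 instances via Run Command.

    `ssm` is expected to behave like boto3.client("ssm").
    Returns the command id, used to look up per-instance results later.
    """
    response = ssm.send_command(
        InstanceIds=instance_ids,
        DocumentName="AWS-RunPowerShellScript",  # "AWS-RunShellScript" for Linux
        Parameters={"commands": commands},
    )
    return response["Command"]["CommandId"]
```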

Some official, useful links to boost your skills on AWS architectures:

https://www.wellarchitectedlabs.com/ (contains hands-on labs with scripts)

https://www.vpcendpointworkshop.com/

https://aws.amazon.com/fr/architecture/

https://aws.amazon.com/architecture/well-architected